Chapter 1

Chapter 2

Case study

CASE STUDY: Does colour constancy exist?

Colour constancy concerns our ability to detect the colour of an object despite changes in illumination. For example, when looking at the sea on a morning stroll along the beach, you see it as blue, and it seems the same colour at sunset. The light reflected from the sea that enters the eye has a different spectral composition at sunset, yet we perceive the colour to be the same. Examples like this have led many to believe that a mechanism in the visual system carries out calculations on the surface colour of objects, so that we perceive an object as having a single colour in different lighting conditions. However, Foster (2003) questions the evidence for this assertion, arguing that it remains unproven that such a mechanism exists.

Many psychologists assume that colour constancy is a basic feature of visual processing, especially since it has clear biological importance, such as in distinguishing ripe from unripe fruit. Colour constancy allows for a stable visual world.

Colour perception depends on the reception of the wavelengths of light reflected from an object. However, the light entering the eye is a combination of the colour of the light source and the properties of the object itself; thus, “a red paper in white light can look the same as a white paper in red light” (Foster, 2003, p. 1). The task of the visual system is to calculate the colour of the object by controlling for, or eliminating, the colour of the light. This task may be easier when several differently coloured objects are present, because cues from more than one object help to separate the colour of the light from the colours of the surfaces. This is the basis of Land’s retinex colour-constancy schemes. However, measuring perceived colour is not without its problems.
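Foster’s red-paper example can be made concrete with a deliberately simplified sketch. It assumes a three-channel (red, green, blue) approximation in which the light reaching the eye from a surface is the illuminant multiplied, channel by channel, by the surface’s reflectance; real spectra are continuous, and all the values below are hypothetical. Under these assumptions the two situations in the quotation produce identical signals at the eye.

```python
from typing import Tuple

# Hypothetical three-channel (R, G, B) values in the range 0 to 1.
RGB = Tuple[float, float, float]


def reflected_light(illuminant: RGB, reflectance: RGB) -> RGB:
    """Simplified model: the signal reaching the eye is the channel-by-channel
    product of the illuminant and the surface reflectance."""
    r = illuminant[0] * reflectance[0]
    g = illuminant[1] * reflectance[1]
    b = illuminant[2] * reflectance[2]
    return (r, g, b)


white_light = (1.0, 1.0, 1.0)
red_light = (1.0, 0.2, 0.2)    # hypothetical reddish illuminant
white_paper = (1.0, 1.0, 1.0)  # reflects all three channels equally
red_paper = (1.0, 0.2, 0.2)    # reflects mainly the red channel

signal_a = reflected_light(white_light, red_paper)   # red paper, white light
signal_b = reflected_light(red_light, white_paper)   # white paper, red light
print(signal_a, signal_b, signal_a == signal_b)
# (1.0, 0.2, 0.2) (1.0, 0.2, 0.2) True
```

Because the eye receives only the product, a single signal cannot tell the visual system which factor came from the light and which from the surface; some further assumption about the illuminant is needed.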

One method is colour naming, in which participants select a name for the colour they perceive. Responses can then be recorded for a number of objects under different illuminations. One problem is that this method is imprecise: participants often use the same colour name (e.g., blue) for different shades (of blue), even when the illumination is constant. It has been estimated that there are more than 2 million distinctly perceivable colours, far more than the number of colour names people are willing to use.

A second method is colour matching: participants match two colours by adjusting the red, green and blue components of the second colour using colour controls. The same coloured surface is presented under different illuminations, and the participant adjusts the colour of the second surface to match the first “as if it were ‘cut from the same piece of paper’” (Foster, 2003, p. 2). Using this method with computer-simulated scenes, colour matching can be 79% to 87% accurate. The problem with this method is that it only establishes that two items are judged equivalent; it does not measure perceived colour. This is because participants need merely judge how the colour of one surface relates to the colour of one or more other surfaces, and hence the method does not measure colour constancy.

A third method is achromatic adjustment: participants adjust the colour of a surface within a scene until it appears white. The method effectively records their perception of the colour of the illuminant, since that is what they are adjusting for. Performance can reach up to 83% of the true neutral colour (white). However, although this method has been used to measure colour constancy, Foster argues that it cannot do so, because participants can complete the task by using the hues of the surrounding surfaces in the scene. To achieve colour constancy, the illuminant needs to be identified, but judgements based on relative brightness or hue say nothing about the nature of the illuminant.

Judgements of colour can be based on numerous sources of information. One common assumption is that the object with the brightest surface is white; once this assumption is made, other colours are perceived relative to that surface. However, many other cues are available, and the fact that so many are used implies that there may not be a single mechanism that computes colour constancy.
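The “brightest surface is white” assumption corresponds to what the computational literature calls a white-patch heuristic. The sketch below illustrates only that one heuristic; it is not Land’s retinex algorithm or a mechanism Foster endorses. It assumes a simplified three-channel model in which each surface’s signal is the illuminant multiplied by its reflectance, and the scene values and function name are hypothetical.

```python
from typing import Dict, Tuple

RGB = Tuple[float, float, float]


def white_patch_correct(scene: Dict[str, RGB]) -> Dict[str, RGB]:
    """Estimate surface colours by assuming the brightest surface is white.

    The signal from the brightest surface (largest summed R+G+B) is taken
    as an estimate of the illuminant; every surface is then divided by it,
    channel by channel. Illuminant channels are assumed to be non-zero.
    """
    brightest = max(scene, key=lambda name: sum(scene[name]))
    illuminant = scene[brightest]
    return {
        name: tuple(round(s / i, 3) for s, i in zip(signal, illuminant))
        for name, signal in scene.items()
    }


# Hypothetical signals for three papers under a reddish light (1.0, 0.5, 0.5).
scene = {
    "white paper": (1.0, 0.5, 0.5),
    "blue paper": (0.2, 0.1, 0.4),
    "green paper": (0.2, 0.3, 0.1),
}
print(white_patch_correct(scene))
# {'white paper': (1.0, 1.0, 1.0), 'blue paper': (0.2, 0.2, 0.8), 'green paper': (0.2, 0.6, 0.2)}
```

If the scene contains no white surface, the heuristic mistakes the brightest surface’s own colour for the colour of the light, which illustrates why a visual system relying on this single cue would make errors.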

Because of these limitations in measuring perceived colour, Foster argues that psychologists cannot yet know whether true colour constancy exists.

Reference

Foster, D.H. (2003). Does colour constancy exist? Trends in Cognitive Sciences, 7(10), 439–443.

Chapter 3

Case studies

CASE STUDY: Bruce and Young’s (1986) model of face processing

Vuilleumier et al. (2005): Effects of perceived mutual gaze and gender on face processing and recognition memory

Bruce and Young (1986) described a model of face processing in which different aspects of face processing are carried out independently. This modular theory has been questioned, although there is supporting evidence for a number of these independent processes. The study by Vuilleumier et al. (2005) examined the effects of perceived gaze direction on the ability to judge features of a face, such as the person’s sex, and on the ability to recognise the face afterwards.

The two main routes in the Bruce and Young model are the processing of an individual face for identity recognition (knowing who the person is) and the processing of facial expression and of features such as the sex, age and race of the face. If the two routes are independent, then one type of processing should be able to succeed while the other fails. This has been found in prosopagnosic patients, who can recognise the emotions in a face but not the identity of the face itself. Conversely, some patients who are impaired at recognising expressions can still recognise the face itself.

One important finding of Vuilleumier et al.’s (2005) study is that recognition of a set of new faces is influenced by social signals from the face (the direction of gaze) and by features such as the sex of the face. This implies that the two routes are not completely independent, and that there is likely to be a good deal of interaction between them.

Perceived gaze is an interesting subject to study because it conveys important social information, such as the intentions and interests of others. Gaze can also signal that an encounter is likely, and whether it might be positive (e.g., attraction) or negative (e.g., threat). Gender judgement is also something that people do rapidly and with a good deal of accuracy, even when cues such as facial hair and make-up are removed.

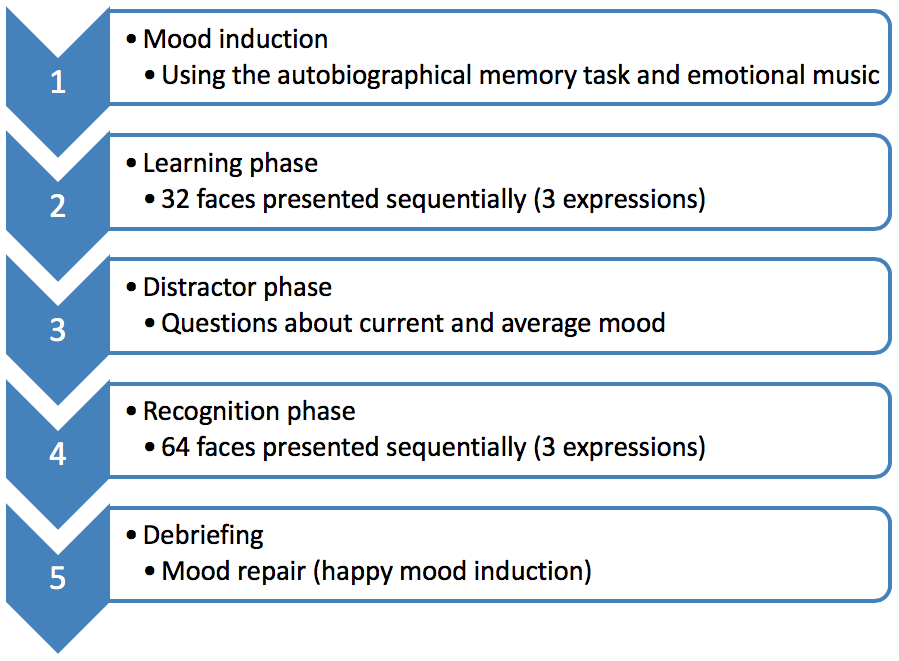

In the Vuilleumier et al. (2005) study there were two tasks. In the first, participants were shown a number of faces and had to decide, by pressing one of two keys, whether each face was male or female. The second was a recognition task, in which participants had to say whether or not each face had been shown in the first task. The faces were presented either facing the viewer or turned to the side, with the eyes either looking at the viewer or looking away.

The results showed that gaze direction significantly affected judgements of the sex of the face. Participants were quicker when the eyes were averted than when they were looking at the viewer. Interestingly, this effect was especially marked when the face was of the opposite sex to the participant. In the recognition task, faces that had looked directly at the viewer were recognised more easily than side-on faces and faces with averted gaze, again especially for opposite-sex faces.

The conclusion is that perceived eye contact can interact with face processing and face recognition, even when gaze direction is not relevant to the task.

References

Bruce, V. & Young, A.W. (1986). Understanding face recognition. British Journal of Psychology, 77, 305–327.

Vuilleumier, P., George, N., Lister, V., Armony, J. & Driver, J. (2005). Effects of perceived mutual gaze and gender on face processing and recognition memory. Visual Cognition, 12 (1), 85–101.

CASE STUDY: Bruce and Young’s (1986) model of face processing

Armann et al. (2016): A familiarity disadvantage for remembering specific images of faces

Bruce and Young’s (1986) model of face processing takes the view that aspects of facial processing are somewhat independent. However, a growing body of research suggests that this might not be the case. The study by Armann, Jenkins and Burton (2016) aimed to test the assumption that unfamiliar face processing is image-bound, whereas familiar face processing is more abstract.

Pictorial information dominates one’s representation of an unfamiliar face, making it easy to recognise when a specific image has been seen before. However, when we see a familiar face, familiarity information (personal information) is generated automatically, at the expense of pictorial information. For example, participants might find themselves thinking “Yes, I know I saw President Trump before, but I’m not sure if I saw that particular photo of him”.

In previous experiments on recognition memory for faces, the test phase often confounds recognition of the photo with recognition of the person, because the same photo is used at the learning and test phases. One important finding of Armann et al.’s (2016) study is that, when the face is familiar, viewers are more accurate at remembering that they saw “this person” than that they saw “this picture”.

In Armann et al.’s (2016) study, there were two experiments. In the first experiment, participants completed a memory task in which they were shown a set of faces during the study phase and were instructed to remember the people shown. In the recognition (test) phase, participants were shown a further set of faces. For each image, half the participants were asked, “did you see this person before?” and the other half were asked, “did you see this exact picture before?”. The second experiment was the same, except that participants were instructed to remember the exact images during the study phase.

Results from the first experiment were in line with previous literature, showing that people are better at recognising familiar than unfamiliar faces. However, the researchers found that when the memory task is image-specific, individuals are at a disadvantage when recognising familiar faces. This suggests that coding of familiar faces is less reliant on image-specific properties than coding of unfamiliar faces. Furthermore, in the picture task of the second experiment, when the test item was the same picture as at study, there was an advantage for unfamiliar faces.

The key differences between familiar and unfamiliar face-recognition memory were unaffected by instruction: unfamiliar faces were encoded more pictorially and familiar faces more abstractly. The researchers therefore concluded that we rely on more abstract representations for familiar than for unfamiliar faces, which results in poorer coding of pictorial information in images of people we know.

References

Armann, R.G.M., Jenkins, R. & Burton, A.M. (2016). A familiarity disadvantage for remembering specific images of faces. Journal of Experimental Psychology: Human Perception and Performance, 42 (4), 571–580.

Bruce, V. & Young, A.W. (1986). Understanding face recognition. British Journal of Psychology, 77, 305–327.

Research activity

RESEARCH ACTIVITY: Mental imagery

According to Kosslyn (1980, 1994), the mechanisms used to generate mental images are the same as those used in visual perception. The main difference between visual perception and mental imagery is that vision provides more detail. Another difference is that mental images are often created deliberately. If mental imagery does indeed use the same mechanisms as visual perception, then an imagined task should take about the same time as the task itself when carried out.

The tasks

First, think about the garden where you live (or a garden you know very well). Measure the time it takes to imagine walking from one end of the garden to the other. Don’t rush; imagine yourself walking at a normal, steady pace.

Next, think of an open space you know quite well, such as a local playing field. Measure the time it takes to imagine yourself walking in this field for about the same distance as you “walked” in the first task. Use one landmark in the field as the signal that you have covered the same distance as in the first task.

The third task is to measure the actual time it takes you to (physically) walk the length of the garden, and the fourth task is to measure the time it takes you to walk the same distance in the field.

You should have four measurements. We will call them:

- Garden Imagery – the time taken for an imaginary walk down the garden.

- Garden Actual – the time taken for a real walk down the garden.

- Field Imagery – the time taken for an imaginary walk in a field.

- Field Actual – the time taken for a real walk in a field.

Dealing with the data

- Calculate the ratio: Garden Imagery divided by Garden Actual. For example, suppose it took 40 seconds to imagine the walk and 60 seconds to do it; then you calculate 40/60, which is approximately 0.667. Let’s call this value the Garden Ratio.

- Calculate the ratio: Field Imagery divided by Field Actual. Let’s call this value the Field Ratio.
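The two ratios can be computed with a short script. The function name and the field timings below are hypothetical; the garden timings are the 40-second and 60-second example given above.

```python
def imagery_ratio(imagined_seconds: float, actual_seconds: float) -> float:
    """Ratio of imagined walking time to actual walking time."""
    if actual_seconds <= 0:
        raise ValueError("actual time must be positive")
    return imagined_seconds / actual_seconds


garden_ratio = imagery_ratio(40, 60)  # the worked example in the text
field_ratio = imagery_ratio(25, 60)   # hypothetical field timings
print(round(garden_ratio, 3), round(field_ratio, 3))
# 0.667 0.417
```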

Kosslyn’s prediction

If mental imagery and visual perception use the same mechanisms, as Kosslyn proposes, there are two predictions:

- Both ratios should be close to 1.

- The actual values of the Garden Ratio and the Field Ratio should be very similar to each other.

The explanation of these predictions is as follows. It should take about the same time to imagine the walks as to do them, so the computed ratios should be close to 1 (i.e., around 0.8 to 1.2). The time taken to imagine a walk should depend on the actual distance, not on the amount of detail along the route. Therefore the two ratios should have about the same value.
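The two predictions can be checked against your own ratios. The 0.8 to 1.2 band comes from the text; the 0.1 tolerance used for “very similar” is an assumption chosen for illustration, and the example ratios are hypothetical.

```python
def check_predictions(garden_ratio: float, field_ratio: float,
                      low: float = 0.8, high: float = 1.2,
                      max_difference: float = 0.1) -> dict:
    """Test the two imagery predictions for a pair of ratios.

    both_near_one: each ratio falls in the band [low, high].
    ratios_similar: the ratios differ by no more than max_difference
    (an assumed tolerance, not a value from the text).
    """
    both_near_one = all(low <= r <= high for r in (garden_ratio, field_ratio))
    ratios_similar = abs(garden_ratio - field_ratio) <= max_difference
    return {"both_near_one": both_near_one, "ratios_similar": ratios_similar}


print(check_predictions(0.95, 1.02))
# {'both_near_one': True, 'ratios_similar': True}
print(check_predictions(0.667, 0.417))
# {'both_near_one': False, 'ratios_similar': False}
```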

However, it probably took you less time to imagine the walks than to do them, in which case your ratios are likely to be less than 1, perhaps 0.5 or below. Furthermore, since a garden has more detail, and hence more landmarks, than an open field, it probably took longer to imagine the walk in the garden than the walk in the field. In this case, your Field Ratio is likely to be smaller than your Garden Ratio.

Ask yourself:

- Is mental imagery really like seeing with your eyes shut?

- Why should there be any discrepancy between the time it takes for an imagined walk and the time the real walk takes?

- Does everyone have mental images? And do we use them in the same way?

- How is it that we can tell whether we imagined something or actually saw it?

- Is it possible to measure mental imagery objectively?

References

Kosslyn, S.M. (1980). Image and Mind. Cambridge, MA: Harvard University Press.

Kosslyn, S.M. (1994). Image and Brain: The resolution of the imagery debate. Cambridge, MA: MIT Press.

Chapter 4

Case study

CASE STUDY: Gibson’s theory of direct perception and affordances

The concept of affordances, originally proposed in Gibson’s “information pickup” theory of perception, has received a great deal of attention (e.g., Norman, 1988). In this case study, we examine the details of this concept and discuss why it is important.

Gibson rejected the notion that the study of visual perception should focus on the physical properties of objects in the environment. Instead, he focused on the idea that, when people view an object, they do not see its physical, molecular structure but rather its function. In this way, Gibson held that trying to understand visual processes without reference to the way in which animals interact with their environments can lead to a false understanding. Gibson defined affordances as follows: “the affordances of the environment are what it offers the animal, what it provides or furnishes, either for good or ill” (Gibson, 1979, p. 127). An affordance has three main properties:

1. An affordance exists relative to the action capabilities of a particular actor.

This means that an object can be perceived as having a certain use or function depending on the capabilities of the actor (the term “actor” is used to refer to the perceiver). Gibson used the example of a long, flat, rigid surface that can afford support for one person but may not afford support for another. Suppose you want to cross a muddy patch without getting mud on your shoes. You look around and there are several objects lying about. A plank of wood would afford support for you if it was the right size, was not porous and was strong enough to hold your weight. The same plank might not afford support to a heavier person. The point is that an affordance arises from an interaction between the object and the perceiver’s capabilities. Another point is that, although the plank may have been made for something else, it still affords support in this case.

2. The existence of an affordance is independent of the actor’s ability to perceive it.

This statement means that the affordance of an object is invariant and does not depend on it being perceived or detected. It is a property of the object and the current situation.

3. An affordance does not change as the needs and goals of the actor change.

This is a similar point to the previous one and emphasises the invariance of an affordance. It means that the affordances of an object are always present, even if the individual cannot detect them. In this way affordances are objective: they do not depend on interpretation, value or meaning. A plank can offer the affordance of support independently of the meaning or interpretation that the perceiver attaches to it. At the same time, without a perceiver the affordance is meaningless, so in the plank example the plank’s affordances do not exist separately from the actor. In other words, an object’s affordance results from an interaction between the object and the perceiver. Further, when an affordance exists and there is no physical barrier to its detection, direct perception is possible. An affordance can also exist regardless of the individual’s experience: for example, a hidden door in a wall panel affords the opportunity to move into another room, even though the person may not see it. However, detection of an affordance can depend on experience and culture (e.g., suspecting that there is a hidden door and finding it). The individual must learn to discriminate the relevant information in order to detect affordances; learning is seen as a process of discriminating between patterns of information in the environment rather than as something used to supplement perceptual processes. A further point implied by Gibson’s theory is that affordances are binary: an affordance either exists or it does not.

There has been some debate about the nature of affordances as described by Gibson. For example, Norman (1988) questions whether an affordance can ever exist outside perception. A designer may create a novel object whose function is immediately obvious to the user, but in doing so the designer and the user are both relying on prior knowledge and experience.

McGrenere and Ho (2000) use the example of the design of a door to highlight the problems with Gibson’s and Norman’s views. Suppose there is a door with no handle and no flat panel. To know how to open the door, one would need previous experience with this type of door. According to Gibson’s definition, the fact that the door can be opened means that it has an affordance, even though the actor may have no idea how to open it. According to Norman, however, its affordance exists only when the user has prior knowledge of how to open it: the action possibility of the door needs to be conveyed to, or perceived by, the user. One implication is that an affordance may not be binary, as Gibson described. An object may have an action possibility that is hard to detect (e.g., a hidden door) or hard to achieve (e.g., a flight of stairs that affords ascent but is very difficult to climb because it is covered in snow).

This debate is not just two schools of thought nit-picking over details that are irrelevant in the real world. On the contrary, it has been extremely useful for designers wanting to develop “user-friendly” products and services (McGrenere & Ho, 2000). By studying the affordances of objects, designers can produce better designs. Gaver (1991) discusses how Gibson’s ideas emphasise two aspects of design: the real affordance of an object, such as what it is used for, and its perceived or apparent affordance, that is, the design features that suggest an affordance. When the real and apparent affordances match, the artefact is easy to use and instructions for use can be kept to a minimum; when they mismatch, errors are common and extra instructions will be required.

References

Gaver, W.W. (1991). Technology affordances. CHI ’91 Conference Proceedings, New Orleans, Louisiana, 27 April–2 May, pp. 79–84.

Gibson, J. J. (1979). The Ecological Approach to Visual Perception. Boston, MA: Houghton Mifflin.

Greeno, J.G. (1994). Gibson’s affordances. Psychological Review, 101, 336–342.

McGrenere, J. & Ho, W. (2000). Affordances: Clarifying and evolving a concept. Proceedings of Graphics Interface 2000, Montreal, May 2000. Available online.

Norman, D.A. (1988). The Psychology of Everyday Things. New York: Basic Books.

Chapter 5

Case study

CASE STUDY: Multi-tasking efficiency: serial or parallel processing strategy?

Performance optimisation in multi-tasking is a controversial topic, sparking much debate about whether cognitive processes relating to different tasks are carried out sequentially or in parallel.

One central aim of cognitive psychology and cognitive neuroscience is to understand, and optimise, the processes underlying multi-tasking, so that multiple tasks can be dealt with more efficiently. Many psychologists have argued that a bottleneck (a process that limits our ability to process several simultaneous inputs) causes the decline in performance typically seen in tasks that require the participant to multi-task (Pashler, 1998). Others, however, have argued that parallel processing is possible by means of capacity sharing, although serial processing may be the more efficient strategy for multi-tasking.

To understand this argument fully, it is important to understand the terms “parallel processing” and “serial processing”. When a task is carried out together with another task, the individual might use serial or parallel processing. When an individual switches attention back and forth between the two tasks, with only one task being processed at any moment, this is known as serial processing. When the two tasks are processed at the same time, this is known as parallel processing. Carrying out two or more tasks at the same time typically results in severe performance costs, such as a greater error rate.

Early work on multi-tasking assumed that access to a single processing channel is ordered sequentially. For example, if Task A enters the capacity-limited processing stage, any additional task being processed at the same time (e.g., Task B) will not be processed further: Task B’s processing remains halted until Task A’s critical processing has finished. On this view, serial task scheduling results from a capacity-limited processing bottleneck that is structural in nature (Broadbent, 1958). This is the core assumption of the response-selection bottleneck (RSB) model (Pashler & Johnston, 1989). According to this model, peripheral processing (such as perceptual encoding and response execution) of two tasks can be carried out in parallel, whereas central processing (response selection) is capacity-limited and so does not proceed in parallel (Pashler & Johnston, 1989).

The limitations on central processing, which cause dual-task costs, have been at the centre of theoretical work in experimental psychology for decades. In the standard paradigm, two choice reaction-time tasks are presented, and the interval between the onsets of the Task 1 (T1) and Task 2 (T2) stimuli, the stimulus onset asynchrony (SOA), is varied. Performance on Task 1 is largely unaffected by this manipulation of task overlap, whereas performance on Task 2 depends on how close together the two tasks are: the shorter the SOA, the slower the reaction time and the higher the error rate in Task 2. This slowing is known as the psychological refractory period (PRP) effect. The PRP effect has been widely used as a measure of dual-task cost, which is thought to arise at the capacity-limited response-selection stage. It is important to note that the costs of carrying out two tasks simultaneously serve as a marker of multi-tasking efficiency (Fischer & Plessow, 2015).
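The response-selection bottleneck account of the PRP effect is often expressed as a simple stage model, sketched below under stated assumptions. Each task is split into a pre-bottleneck (perceptual) stage, a central response-selection stage that only one task can occupy at a time, and a response-execution stage. The stage durations are hypothetical, the class and function names are illustrative, and the model deliberately ignores errors. Task 2’s central stage cannot begin until both its own perceptual stage has finished and Task 1 has released the bottleneck.

```python
from dataclasses import dataclass


@dataclass
class StageTimes:
    perceptual: int  # pre-bottleneck stage, in ms (hypothetical)
    central: int     # response selection: the capacity-limited bottleneck
    motor: int       # response execution, in ms


def rt_task1(t1: StageTimes) -> int:
    # Task 1 reaches the bottleneck first, so it is never delayed.
    return t1.perceptual + t1.central + t1.motor


def rt_task2(t1: StageTimes, t2: StageTimes, soa: int) -> int:
    # Times are measured from Task 2 stimulus onset, which comes `soa` ms
    # after Task 1's. On this clock, Task 1 frees the bottleneck at
    # t1.perceptual + t1.central - soa.
    central_start = max(t2.perceptual, t1.perceptual + t1.central - soa)
    return central_start + t2.central + t2.motor


task1 = StageTimes(perceptual=100, central=200, motor=100)
task2 = StageTimes(perceptual=100, central=200, motor=100)
for soa in (50, 150, 300, 600):
    print(soa, rt_task1(task1), rt_task2(task1, task2, soa))
# 50 400 550
# 150 400 450
# 300 400 400
# 600 400 400
```

In this sketch Task 2 reaction time falls by one millisecond for every millisecond added to the SOA, then levels off once Task 1 no longer holds the bottleneck, while Task 1 reaction time stays constant; the difference from the long-SOA value is one way to express the dual-task cost.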

Early researchers identified two factors that determine whether tasks can be processed in parallel: task similarity and task practice. Allport et al. (1972) argued that multi-tasking costs result not from exceeding the capacity of a single-channel processor but from the difficulty of separating two similar tasks. They demonstrated that when two dissimilar tasks are combined, they can be processed in parallel to the same quality as in single-task processing. Similarly, Shaffer (1975) showed that skilled typists could easily combine copy typing with a verbal shadowing task, yet failed to combine audio-typing with reading from a sheet (Halvorson & Hazeltine, 2015).

Several researchers believe that adopting a parallel strategy can benefit dual-task efficiency. Miller and colleagues (2009) demonstrated that parallel processing can outperform serial processing in terms of dual-task efficiency. They showed that when participants carried out tasks simultaneously, they produced patterns typical of parallel processing: increased reaction times for Task 1 together with decreased reaction times for Task 2.

They also demonstrated that the distribution of stimulus onset asynchronies determined the relative efficiency of parallel and serial processing modes. When Task 1 performance is emphasised, resources are allocated in favour of Task 1; when all resources are dedicated to Task 1, processing is effectively serial.

However, in the absence of specific priority instructions, individuals freely choose a parallel processing strategy (Lehle & Hübner, 2009). Lehle, Steinhauser and Hübner (2009) further showed that parallel processing is associated with less mental effort. In their study, 28 participants were instructed to adopt either a serial or a parallel processing strategy. Parallel processing showed higher performance costs than serial processing, but serial processing was judged as more effortful. Therefore, although it may not be the most efficient way of multi-tasking, parallel processing is a less effortful strategy than strict serial processing, and, given the choice, participants tend to adopt the processing mode that requires the least mental effort (Kool et al., 2010). This also suggests a compromise between optimising performance and minimising mental effort (Lehle et al., 2009).

Although serial processing appears to be the most efficient strategy, people tend to favour a parallel strategy when multi-tasking. By adopting a more flexible and context-sensitive processing strategy, individuals can adjust to environmental demands, a capacity that is important for adaptive, intelligent behaviour (Goschke, 2013).

References

Allport, A., Antonis, B. & Reynolds, P. (1972). On the division of attention: A disproof of the single channel hypothesis. Quarterly Journal of Experimental Psychology, 24, 225–235.

Broadbent, D.E. (1958). Perception and Communication. London: Pergamon Press.

Fischer, R. & Plessow, F. (2015). Efficient multitasking: Parallel versus serial processing of multiple tasks. Frontiers in Psychology, 6 (1366), 1–11.

Goschke, T. (2013). Volition in action: Intentions, control dilemmas and the dynamic regulation of cognitive intentional control. In W. Prinz, M. Beisert & A. Herwig (eds), Action Science: Foundations of an emerging discipline (pp. 409–434). Cambridge, MA: MIT Press.

Halvorson, K.M. & Hazeltine, E. (2015). Do small dual-task costs reflect ideomotor compatibility or the absence of crosstalk? Psychonomic Bulletin & Review, 22 (5), 1403–1409.

Kool, W., McGuire, J.T., Rosen, Z.B. & Botvinick, M.M. (2010). Decision making and the avoidance of cognitive demand. Journal of Experimental Psychology: General, 139, 665–682.

Lehle, C. & Hübner, R. (2009). Strategic capacity sharing between two tasks: Evidence from tasks with the same and with different task sets. Psychological Research, 73, 707–726.

Lehle, C., Steinhauser, M. & Hübner, R. (2009). Serial or parallel processing in dual tasks: What is more effortful? Psychophysiology, 46 (3), 502–509.

Miller, J., Ulrich, R. & Rolke, B. (2009). On the optimality of serial and parallel processing in the psychological refractory period paradigm: effects of the distribution of stimulus onset asynchronies. Cognitive Psychology, 58, 273–310.

Pashler, H. (1998). The Psychology of Attention. Cambridge, MA: MIT Press.

Pashler, H. & Johnston, J.C. (1989). Chronometric evidence for central postponement in temporally overlapping tasks. Quarterly Journal of Experimental Psychology, 41, 19–45.

Shaffer, L.H. (1975). Multiple attention in continuous verbal tasks. In P.M.A. Rabbitt & S. Dornic (eds), Attention and Performance V (pp. 157–167). New York: Academic Press.

Research activity

RESEARCH ACTIVITY: Skill acquisition and the power law

The relationship between practice and performance in perceptual motor skills has been captured by the power law of practice. This law states that, if the time per trial is plotted against the number of trials on log–log axes, the result is a straight line. According to Logan (1988), the law also applies to cognitive skills. Several theories have attempted to explain the effects of practice. The question this activity addresses is whether a simple perceptual motor skill and a simple cognitive skill are acquired at the same rate when the learning strategy is similar.
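One way to check whether a set of practice data follows the power law is to take logarithms of both trial number and time per trial and fit a straight line. The sketch below assumes the common form T = a·N^(−b), so that log T = log a − b·log N, and fits the line by ordinary least squares to recover a and b. The function name is illustrative and the data are hypothetical and noise-free, so the fit simply returns the values used to generate them. A larger b indicates faster learning.

```python
import math
from typing import List, Sequence, Tuple


def fit_power_law(trials: Sequence[int], times: Sequence[float]) -> Tuple[float, float]:
    """Fit T = a * N**(-b) by least-squares regression of log T on log N.

    Returns (a, b). Needs at least two distinct trial numbers and
    strictly positive times, because logarithms are taken.
    """
    xs = [math.log(n) for n in trials]
    ys = [math.log(t) for t in times]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx                    # slope of the straight line on log-log axes
    intercept = mean_y - slope * mean_x  # equals log a
    return math.exp(intercept), -slope


# Hypothetical, noise-free data generated from a = 20 s and b = 0.4.
trials: List[int] = list(range(1, 31))
times = [20.0 * n ** -0.4 for n in trials]

a, b = fit_power_law(trials, times)
print(round(a, 2), round(b, 2))  # 20.0 0.4
```

The same fit can be applied to a score that rises with practice, such as throws completed, but b then comes out negative; treating that value as a learning rate is an assumption that goes beyond the law as stated here.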

Instructions: perceptual motor skill

Three juggling balls are required (about 3 or 4 cm in diameter), together with a pen and paper to record progress. Draw two columns on the paper and label the first column “Trials” and the second column “Throws completed”. Number the rows in the Trials column from 1 to 30. The aim of the exercise is to juggle the balls, with the goal of ten complete throws, where one complete throw consists of moving one ball from one hand to the other by throwing and catching it without dropping any of the balls.

Learning method: how to learn to juggle

A note about throwing and catching: when you throw a ball from one hand to the other, throw it upwards with some height. This will give you more time to prepare for the catch. Also throw the ball slightly across, so that it moves towards the other hand (otherwise you will have to move your body towards it). Avoid moving your body to catch a ball, otherwise you will be out of position to catch the next one.

1. Begin by holding two balls in the left hand and one ball in the right hand.

2. One complete throw. Throw one ball from the left hand to the right hand. This is one throw.

3. If you successfully caught the ball in your right hand and did not drop any of the balls, proceed to step 4; otherwise go back to step 1 and record one throw completed for the trial.

4. Hold two balls in the left hand and one ball in the right hand.

5. Two complete throws. Throw one ball from the left hand to the right hand, but while the ball is in mid-air throw the ball in the right hand to the left hand. Catch both of the balls.

6. If you successfully caught both balls and did not drop any, proceed to step 7; otherwise go back to step 1 and record two throws completed for this trial.

7. Hold two balls in your left hand and one in your right hand.

8. Three complete throws. Throw one ball from the left hand to the right hand, but while the ball is in mid-air throw the ball in the right hand to the left hand. Catch the ball coming to your right hand and throw the ball in your left hand. Next, catch the ball coming to your left hand and catch the ball coming to your right hand.

9. If you successfully caught all three balls and did not drop any, proceed to step 10; otherwise go back to step 1 and record three throws completed for this trial.

10. Hold two balls in your left hand and one in your right hand.

11. Now try for four complete throws, by making three throws as described above and then repeating the first throw (left hand to right hand). If you make an error, go back to step 1 and record four for this trial; otherwise go to the next step.

12. Repeat the process, holding the balls in the same starting position each time, and increase your target by one throw whenever you have not made any errors on the previous attempt. Each time you drop a ball, go back to step 1 and record the number of completed throws. Do this for 30 trials.

Instructions: learning to count in another language

You will need the numbers 1 to 20, printed as words, in a language of which you have no experience. Draw two columns on the paper and label the first column “Trials” and the second column “Number completed”. Number the rows in the trial column from 1 to 30. The aim of the exercise is to learn to count (by writing the numbers) in the foreign language, with the goal of counting to 20 without a single error.

The method of learning

In Spanish (for example) the numbers 1 to 20 are:

- uno

- dos

- tres

- cuatro

- cinco

- seis

- siete

- ocho

- nueve

- diez

- once

- doce

- trece

- catorce

- quince

- dieciséis

- diecisiete

- dieciocho

- diecinueve

- veinte

1. Read the list of numbers from 1 to 20.

2. Hide the numbers and write down the word for 1.

3. Check your answer. If it is correct, go to step 4; otherwise, score 0 for this trial and go to step 1.

4. Hide the numbers and write down the words for 1 and 2.

5. Check your answers. If they are perfectly correct, go to step 6; otherwise, score 1 for this trial and go to step 1.

6. Hide the numbers and write down the words for 1, 2 and 3.

7. Check your answers. If they are perfectly correct, go to step 8; otherwise, score 2 for this trial and go to step 1.

8. Hide the numbers and write down the words for 1, 2, 3 and 4.

9. Check your answers. If they are perfectly correct, go to step 10; otherwise, score 3 for this trial and go to step 1.

10. Continue this process, increasing the target number by 1 each time you make no mistakes. Each time you make a mistake, record the number you counted correctly to and go to step 1. Do this for 30 trials.
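These steps form an adaptive procedure: the target rises by one after each error-free attempt, and a trial ends at the first mistake, scoring the last target completed. A minimal Python sketch of how one trial's score follows from that rule, assuming each attempt is recorded simply as correct or incorrect; the function name and the cap at 20 are our own illustrative choices.

```python
from typing import Sequence


def score_counting_trial(attempts: Sequence[bool], max_target: int = 20) -> int:
    """Score one trial of the counting task (hypothetical helper).

    attempts[k] is True if the attempt at target k + 1 (writing the words
    for 1 up to k + 1) was perfectly correct. A mistake at target k scores
    k - 1, and the trial ends at the first mistake.
    """
    score = 0
    for target, correct in enumerate(attempts[:max_target], start=1):
        if not correct:
            return target - 1  # counted correctly only up to the previous target
        score = target
    return score


# Correct at targets 1, 2 and 3, then a mistake when writing 1 to 4.
print(score_counting_trial([True, True, True, False]))  # 3
```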

Dealing with the data

The first thing to do is to convert each trial number, and the score for each trial, into a log value. The base-10 log values for 1 to 30 are given in the table below (to two decimal places).

| Number | Log value |
|---|---|
| 1 | 0.00 |
| 2 | 0.30 |
| 3 | 0.48 |
| 4 | 0.60 |
| 5 | 0.70 |
| 6 | 0.78 |
| 7 | 0.85 |
| 8 | 0.90 |
| 9 | 0.95 |
| 10 | 1.00 |
| 11 | 1.04 |
| 12 | 1.08 |
| 13 | 1.11 |
| 14 | 1.15 |
| 15 | 1.18 |
| 16 | 1.20 |
| 17 | 1.23 |
| 18 | 1.26 |
| 19 | 1.28 |
| 20 | 1.30 |
| 21 | 1.32 |
| 22 | 1.34 |
| 23 | 1.36 |
| 24 | 1.38 |
| 25 | 1.40 |
| 26 | 1.41 |
| 27 | 1.43 |
| 28 | 1.45 |
| 29 | 1.46 |
| 30 | 1.48 |

The first step is to reproduce this table in a spreadsheet (such as a sheet in Microsoft Excel). Add a third column called “Motor score” and a fourth column called “Cognitive score” and insert the data gathered from both tasks.
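The log values in the table are base-10 logarithms rounded to two decimal places, so they can be generated rather than typed in (in Excel, the LOG10 function does this). An equivalent Python sketch; the name `log_table` is our own:

```python
import math

# Base-10 logarithms of the numbers 1-30, rounded to two decimal places,
# matching the conversion table above.
log_table = {n: round(math.log10(n), 2) for n in range(1, 31)}

for n, value in log_table.items():
    print(f"{n:>2} | {value:.2f}")
```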

Next, add a fifth column called “Log motor” and a sixth column called “Log cognitive” and enter the corresponding log values. You do not need a calculator for these; simply use the table to convert the scores to log values. For example, if on trial 8 you counted correctly to 5, enter 0.70 as the data for this trial, since from the table log(5) = 0.70. A score of 0 has no log value, because log(0) is undefined.
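How closely the points follow a straight line can also be quantified with an ordinary least-squares fit on the log values. The Python sketch below is an illustration rather than part of the original activity: the function name `loglog_fit` and the choice to skip trials scoring 0 are our assumptions. If the scores do follow a power function, the fitted slope estimates its exponent, and Pearson's r close to 1 indicates points lying close to a line.

```python
import math


def loglog_fit(scores):
    """Least-squares line through (log10 trial, log10 score) points.

    scores[i] is the score on trial i + 1. Trials scoring 0 are skipped,
    because log(0) is undefined (an assumption of this sketch).
    Returns (slope, intercept, r), where r is Pearson's correlation.
    """
    xs, ys = [], []
    for trial, score in enumerate(scores, start=1):
        if score > 0:
            xs.append(math.log10(trial))
            ys.append(math.log10(score))
    n = len(xs)
    if n < 2:
        raise ValueError("need at least two trials with non-zero scores")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # r is undefined if every log score is identical (no variation in y).
    r = sxy / math.sqrt(sxx * syy) if syy > 0 else float("nan")
    return slope, intercept, r


# Hypothetical data that follow a power function exactly: score = 2 * trial**0.5.
example = [2 * t ** 0.5 for t in range(1, 31)]
slope, intercept, r = loglog_fit(example)
print(f"slope={slope:.2f} intercept={intercept:.2f} r={r:.2f}")  # slope=0.50 intercept=0.30 r=1.00
```

With real data, comparing r (and the slope) for the motor and cognitive columns is one way to approach the questions that follow.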

The next task is to plot the data on a graph, such as a spreadsheet scatter chart.

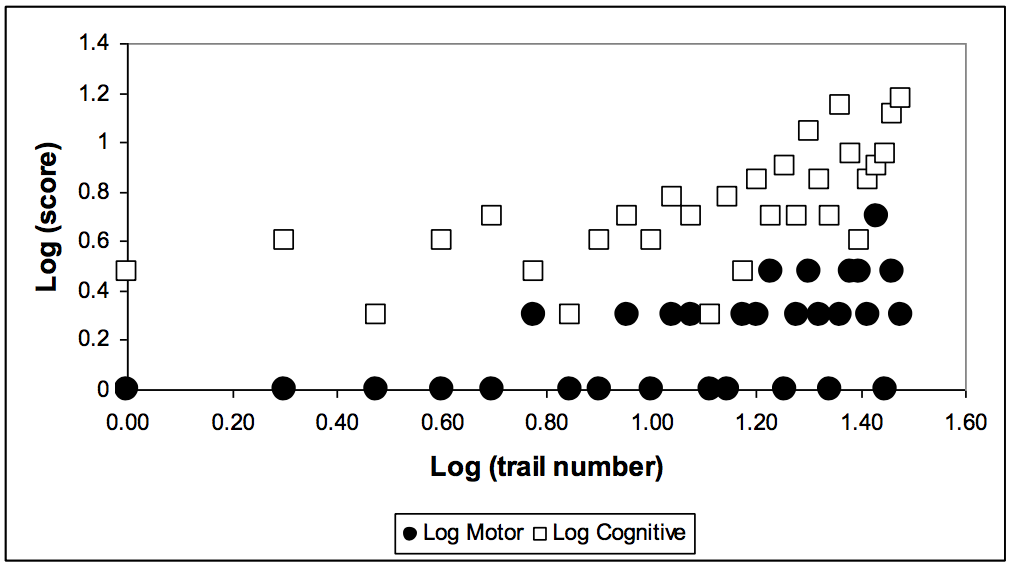

You should end up with a graph that looks something like this (a scatterplot of some example data):

Ask yourself:

- Do the points for the log values of the cognitive scores appear to conform to a straight line?

- Do the points for the log values of the motor scores appear to conform to a straight line?

- Which type of skill approximates more closely to a straight line?

- Does it make sense to compare cognitive and motor skill acquisition (when the tasks are very different)?

- Do you think that the acquisition of a different motor skill (e.g., riding a bicycle) would produce a different graph?

- Do you think that the acquisition of a different cognitive skill (e.g., learning to play noughts-and-crosses or tic-tac-toe) would produce a different graph?

- Can the power law of practice accommodate the phenomenon of insight?

Predictions

Ericsson’s theory of deliberate practice (e.g., Ericsson et al., 1993; Ericsson & Lehmann, 1996) was developed to explain expertise in cognitive skills. This theory suggests that motor skills, such as juggling, should be acquired at a different rate from cognitive skills (and hence that cognitive skills may not conform to the power law of practice). However, several other theories, for example Anderson (1993), predict that both should be acquired according to the power law of practice. In terms of the current activity, which one is right?

References

Anderson, J.R. (1993). Rules of the Mind. Hillsdale, NJ: Lawrence Erlbaum Associates.

Ericsson, K.A. & Lehmann, A.C. (1996). Expert and exceptional performance: Evidence of maximal adaptations on task constraints. Annual Review of Psychology, 47, 273–305.

Ericsson, K.A., Krampe, R.T. & Tesch-Römer, C. (1993). The role of deliberate practice in the acquisition of expert performance. Psychological Review, 100, 363–406.

Logan, G.D. (1988). Toward an instance theory of automatization. Psychological Review, 95, 492–527.


Chapter 6

Case studies

CASE STUDY: The revised model of working memory – the episodic buffer

Baddeley and Hitch (1974) proposed a model of working memory with three components: the visuo-spatial sketchpad, the phonological loop and the central executive. This model has been successful in accounting for a broad range of data from laboratory studies of immediate recall, as well as data from neuropsychological, developmental and neuroimaging studies. More recently, however, Baddeley (2000) added a fourth component to the model, the episodic buffer, which is said to play a role in integrating information between the subsystems and the central executive. It is described as a limited-capacity system that provides temporary storage of information from the subsystems and from long-term memory in a single episodic representation (Baddeley, 2018).

Successful as the earlier formulation of the model has been, Baddeley concedes that “there have always been phenomena that did not fit comfortably within the Baddeley and Hitch model” (Baddeley, 2000, p. 417). In particular, some data are problematic for the phonological loop.

Articulatory suppression

For example, in studies of articulatory suppression, in which the participant repeats the word “the” while learning a visually presented list of numbers, the model predicts that recall will be poor. This prediction rests on the assumptions that (1) visual information has only indirect access to the phonological loop (via subvocal rehearsal) and (2) articulatory suppression should prevent visual information from gaining access to the phonological loop. However, the data show that articulatory suppression results in only a small reduction in recall, typically from seven to five digits (Baddeley et al., 1984). In addition, in studies of brain-damaged patients, individuals with impaired short-term memory show better recall for visually presented digits than for auditorily presented digits. The older version of the model cannot account for these findings, and nor can an explanation based on the visuo-spatial sketchpad, since this structure is assumed to capture a single representation of a complex pattern and to be poor at storing serial information.

Recall of prose

When participants are presented with a list of unrelated words, recall is limited to about five or six items. However, when the words are related (as in prose), correct recall can increase to 16 or so items (Baddeley et al., 1987). A widely accepted explanation is that prose draws on information in long-term memory, which allows the words to be grouped into chunks and so reduces the number of separate units to be recalled, whereas unrelated words cannot be chunked in this way. Chunks of information are not easily represented in the older version of the model, since chunking is not a function of the phonological loop, nor do the chunks themselves reside in long-term memory. Similarly, in studies of the recall of prose, typical recall is about 15 to 20 idea units. This amount exceeds the capacity of the phonological loop; it cannot be accounted for by the storage of a pattern in the visuo-spatial sketchpad; and the central executive is assumed to have no storage capacity at all. Retention of this amount of information seems to involve structures within long-term memory.

Subvocal rehearsal

A key assumption of the working memory model is that there are separate processes for storage and retrieval. Recall is said to be achieved through subvocal rehearsal of the to-be-remembered items. Support for this comes from the finding that articulatory suppression does not affect the word-length effect (a list of long words is more difficult to recall than a list of short words) when items are presented auditorily, yet it does when items are presented visually. With visual presentation under suppression, items cannot be rehearsed subvocally, and hence recall is independent of word length. What is problematic for the theory, however, is that although children show signs of using subvocal rehearsal only from about the age of 7, some form of rehearsal occurs in children as young as 3 years old. Such rehearsal cannot be explained by the function of the visuo-spatial sketchpad.

The binding problem

Every object has several features, such as its physical shape and size, its location, its colour, whether it is moving and so on. When we perceive more than one object, in most cases we correctly associate the right features with the right object. The binding of an object to its associated features, which is assumed to take place in the brain, serves to avoid incorrect combinations of features of objects. It is not clearly understood how binding is achieved. Baddeley has suggested that working memory plays an important role in binding through the central executive, since the model is essentially concerned with the integration of information from more than one modality. However, since the central executive has no “short-term multi-modal store capable of holding such complex representations” (Baddeley, 2000, p. 421), it cannot by itself explain how binding is achieved.

The episodic buffer

The solution to the problems of the previous formulation of working memory is the episodic buffer, which has the following features:

- It is of limited capacity.

- It is a temporary storage system.

- It can integrate information from a variety of sources (perception and long-term memory).

- It is controlled by the central executive through conscious awareness.

- It holds episodes in which information is integrated across space and over time.

- It plays an important role in storing information in, and retrieving it from, episodic long-term memory.

- It may reside in right frontal areas.

In sum, the episodic buffer takes the form of a short-term episodic memory. Whereas the previous formulation of the model focused on separating out the individual components of working memory, the emphasis of the new model is now on the integration of information. It is also suggested that the episodic buffer forms “the crucial interface between memory and conscious awareness” (Baddeley, 2000, p. 422).

References

Baddeley, A.D. (2000). The episodic buffer: A new component of working memory? Trends in Cognitive Sciences, 4(11), 417–423.

Baddeley, A.D. (2018). The episodic buffer: A new component of working memory? In A.D. Baddeley (ed.), Exploring Working Memory: Selected works of Alan Baddeley (pp. 297–311). London: Routledge.

Baddeley, A.D. & Hitch, G.J. (1974). Working memory. In G.H. Bower (ed.), The Psychology of Learning and Motivation: Advances in research and theory (pp. 47–89). London: Academic Press.

Baddeley, A.D., Lewis, V. & Vallar, G. (1984). Exploring the articulatory loop. Quarterly Journal of Experimental Psychology, 36, 233–252.

Baddeley, A.D., Vallar, G. & Wilson, B.A. (1987). Sentence comprehension and phonological memory: Some neuropsychological evidence. In M. Coltheart (ed.), Attention and Performance XII: The psychology of reading (pp. 509–529). Hove, UK: Lawrence Erlbaum Associates.

CASE STUDY: Automatic processes, attention and the emotional Stroop effect

The Stroop effect (Stroop, 1935) occurs when participants are required to name the ink colour in which words are printed:

- In the congruent condition, each word is printed in the colour it names (e.g., the word blue printed in blue ink or the word red printed in red ink).

- In the incongruent condition, the word and the colour in which it is printed differ (e.g., the word blue printed in red ink).

As you might predict, participants are slower to name the ink colours in the incongruent condition than in the congruent condition. The reasoning is that reading the words, as an automatic process, cannot be avoided, and this interferes with naming the colours.

In the emotional Stroop task (e.g., Williams et al., 1996), participants again name the ink colour of words, but the words are now either emotional words (especially threatening words, such as kill) or neutral words. Participants with high levels of trait anxiety (that is, a long-term disposition towards being anxious, though not necessarily clinical anxiety) were slower to name the colour of the emotional words than of the neutral words, and slower than participants with low levels of trait anxiety.
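Both versions of the task are usually summarised as an interference score: the mean colour-naming time in the critical condition minus the mean in the comparison condition. A minimal Python sketch, using hypothetical reaction times and a function name of our own:

```python
from statistics import mean


def interference(test_rts_ms, baseline_rts_ms):
    """Mean colour-naming RT in the test condition minus the baseline (ms).

    Classic Stroop: test = incongruent trials, baseline = congruent trials.
    Emotional Stroop: test = emotional words, baseline = neutral words.
    A positive value means colour naming was slower in the test condition.
    """
    return mean(test_rts_ms) - mean(baseline_rts_ms)


# Hypothetical reaction times (ms) for one participant, not real data.
threat_words = [720, 680, 700]
neutral_words = [650, 640, 660]
print(interference(threat_words, neutral_words))  # 50
```

Comparing this score between high- and low-trait-anxiety groups is one way to express the group difference described above.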

Since slowed responses in the Stroop task are thought to be due to automatic processing of the words, it is reasonable to suppose that the slowed responses of individuals with high levels of trait anxiety on the emotional Stroop task are due to automatic processing of emotional information.

In a study by Dresler et al. (2009), emotional interference was found to be determined by word arousal, independent of valence. Emotional words were also better recalled and recognised than neutral words. Individual differences in state anxiety were associated with emotional interference, which was greater in individuals with high state anxiety. The authors concluded that word arousal produces emotional interference independent of valence, and suggested that state anxiety exacerbates the interference of emotional words by biasing attention towards emotionally salient stimuli.

Studies such as this have raised the issue of whether there is an attentional bias towards threat in individuals with raised levels of anxiety, and whether such biases are automatic (in the sense that they occur rapidly and without voluntary intent). If you are afraid of spiders, you may have first-hand experience of this idea. Imagine you are in the attic looking for some old photographs and you notice something out of the corner of your eye. Given that it’s an attic, full of old cobwebs, you would be forgiven for assuming that the movement in the corner of your vision is a spider! Someone without a fear of spiders might either (a) not even notice the movement or (b) assume that it was something else.

This example demonstrates what has been found in people who are prone to anxiety – they tend to notice potential dangers and threats more easily and much sooner than people who are less prone to anxiety. Clearly, there is a payoff for the anxious person who notices a threat sooner rather than later: they can take evasive action before being harmed. However, anxious people often make the error of thinking that something is dangerous or threatening when it is harmless. This rapid switching of attention towards threat has been observed in the laboratory in anxious students as well as in people diagnosed with an anxiety disorder.

To investigate this idea further, researchers have examined event-related potentials (ERPs). ERPs are patterns of electroencephalographic (EEG) activity obtained by averaging the brain’s responses to the same stimulus (or very similar stimuli) presented repeatedly. A study by Metzger and colleagues (1997) used ERPs to investigate the emotional Stroop effect in individuals with post-traumatic stress disorder (PTSD). Participants with PTSD were slower to name word colours, particularly for traumatic words, indicating a processing bias towards trauma-related information in PTSD. These results were accompanied by significantly delayed and reduced P3 components (the P3 is an ERP component elicited during decision-making and linked to a participant’s reaction to a stimulus) across all word types. Because the P3 effect did not differ between word types, this suggests that Stroop interference was not related to differences in attention-related processing of trauma- versus non-trauma-related words.
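Averaging works because the brain’s response to the stimulus is (ideally) the same on every repetition, whereas background EEG noise varies randomly and tends to cancel out. The Python sketch below simulates this with made-up numbers: the waveform shape, noise level and trial counts are illustrative assumptions, not parameters from Metzger et al. (1997) or any real recording, and the function names are hypothetical.

```python
import math
import random


def simulate_erp_average(n_trials, n_samples=100, noise_sd=5.0, seed=0):
    """Average n_trials simulated EEG epochs time-locked to a stimulus.

    Every epoch contains the same underlying response (a hypothetical
    bump peaking at sample 30) plus independent Gaussian noise.
    Returns (true_response, averaged_epochs).
    """
    rng = random.Random(seed)
    true = [2.0 * math.exp(-(((t - 30) / 8.0) ** 2)) for t in range(n_samples)]
    total = [0.0] * n_samples
    for _ in range(n_trials):
        for t in range(n_samples):
            total[t] += true[t] + rng.gauss(0.0, noise_sd)
    return true, [v / n_trials for v in total]


def rms_error(a, b):
    """Root-mean-square difference between two equal-length waveforms."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))


for n in (1, 10, 100):
    true, avg = simulate_erp_average(n)
    print(n, round(rms_error(true, avg), 2))
```

With independent noise, the error remaining in the average shrinks roughly in proportion to 1/√n, which is why ERP studies average over many presentations.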

On the other hand, some researchers have argued that ERPs are a more sensitive measure of attentional biases than reaction time measures (Thomas et al., 2007). Several ERP studies of healthy individuals have found larger ERP amplitudes in response to emotional stimuli than to neutral stimuli, and to emotionally negative stimuli than to emotionally positive stimuli. This suggests that preferential processing is evident in ERP amplitudes rather than in reaction time measures.

Bernat et al. (2001) presented undergraduates with words on a computer screen and found that unpleasant words elicited more positive amplitudes than pleasant words across all ERP components. The P100, N1 and P200 are potentials associated with visual processing, while the P300 is an ERP component elicited during decision-making. Weinstein (1995) found no reaction time differences between low- and high-anxiety individuals, but did find larger N100 (a visual or auditory ERP component) and P400 (an ERP response to words and other visual stimuli) amplitudes in the high-anxiety individuals.

Overall, the literature suggests that larger ERP amplitudes to negative emotional stimuli reflect the activation of sensory and cognitive components, with both pictorial and word stimuli, even in the absence of any observed behavioural differences. These patterns have been interpreted as an indication of an adaptive “negativity bias”: an attentional bias, found in the general population, that prioritises the processing of negative over mundane stimuli (Carretié et al., 2001). It is concluded that ERPs are a sensitive measure of the processes underlying emotional Stroop performance and can be used to elucidate attentional biases in healthy and clinical populations (Thomas et al., 2007).

In many of these experiments, highly anxious individuals have been found to direct their attention towards items related to personal threat (e.g., words related to health, or photographs of mutilation) when these items appear alongside more neutral items. For example, when a smiling face and an angry face are presented at the same time on a computer screen, the anxious individual looks at the angry face first.

Apart from the emotional Stroop task, another much-used task is the “dot probe” method for measuring attention, devised by MacLeod and Mathews (1988). A probe, such as an asterisk (*), is presented on a computer monitor, and the participant is required to press a button as soon as he or she sees it appear. If the person happens to be looking at the place where the probe appears, they will respond faster than if they had been looking at a different area of the screen. The reaction time of the button press therefore reflects where the person was looking relative to where the asterisk appeared.

For example, two words are presented on the screen, one on the left and the other on the right: one is a threatening word (e.g., dagger) and the other a non-threatening word (e.g., number). Suppose dagger is presented on the left and number on the right. An anxious person would orient towards dagger, while a low-anxiety person would attend to each word equally. The probe is then presented in the middle of the word dagger (e.g., dag*ger). If the participant is looking at dagger, they will respond sooner than if they had been looking at number. In this way, the direction of attention can be inferred from the probe and the reaction time.
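Dot-probe results are commonly summarised as a bias score: the mean reaction time when the probe replaces the neutral word minus the mean when it replaces the threatening word. The exact formula varies between studies, so treat the Python sketch below, with its hypothetical data and function name, as one common version rather than the procedure used by MacLeod and Mathews (1988).

```python
from statistics import mean


def attentional_bias_index(rts_probe_at_neutral_ms, rts_probe_at_threat_ms):
    """Dot-probe bias score in ms.

    Positive: responses are faster when the probe appears where the threat
    word was, suggesting attention was drawn towards threat.
    Negative: faster at the neutral location (attention away from threat).
    """
    return mean(rts_probe_at_neutral_ms) - mean(rts_probe_at_threat_ms)


# Hypothetical RTs (ms) for one participant, not real data.
probe_at_neutral = [540, 560, 550]
probe_at_threat = [510, 520, 530]
print(attentional_bias_index(probe_at_neutral, probe_at_threat))  # 30
```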

Specificity of attentional bias

Research has shown that stimuli connected with what an anxious person fears most attract the most attention. For example:

- People with a spider phobia attend to words such as hairy or creepy.

- People with a social phobia attend to words related to socialising (e.g., party).

- People with an eating disorder attend to words related to food (e.g., chocolate).

- Anxious students tested just before their exams have been shown to attend to words related to success and failure (e.g., error).

The tendency for anxious individuals to perceive a threat does not seem to depend on conscious awareness or deliberate intent, as attentional bias has been found to exist even for stimuli that cannot be reported, such as when the words are presented subliminally (e.g., Mogg et al., 1993). It may be, therefore, a behaviour that is difficult to control or suppress (and this then has implications for therapy).

Research has also suggested that individuals with generalised anxiety disorder (GAD) show an attention bias for relevant threatening information (Amir et al., 2009). In the study by Amir et al. (2009), participants completed a probe detection task in which they identified a letter (E or F) that replaced one member of a word pair. In the attention modification programme (AMP) group, attention was trained by building in a contingency between the probe location and the non-threatening word. Participants in the AMP group showed a change in attention bias and a decrease in anxiety, effects that were not present in the attention control condition. These results are consistent with the notion that attention plays a causal role in the maintenance of GAD, and suggest that altering attention mechanisms may effectively reduce anxiety.

References

Amir, N., Beard, C., Burns, M. & Bomyea, J. (2009). Attention modification program in individuals with generalised anxiety disorder. Journal of Abnormal Psychology, 118 (1), 28–33.

Bernat, E., Bunce, S. & Sherin, H. (2001). Event-related brain potentials differentiate positive and negative mood adjectives during both supraliminal and subliminal visual processing. International Journal of Psychophysiology, 42 (1), 11–34.

Carretié, L., Martin-Loeches, M., Hinojosa, J.A. & Mercado, F. (2001). Emotion and attention interaction studied through Event-Related Potentials. Journal of Cognitive Neuroscience, 13 (8), 1109–1128.

Dresler, T., Mériau, K., Heekeren, H.R. & Van Der Meer, E. (2009). Emotional Stroop task: Effect of word arousal and subject anxiety on emotional interference. Psychological Research PRPF, 73 (3), 364–371.

MacLeod, C. & Mathews, A. (1988). Anxiety and the allocation of attention to threat. The Quarterly Journal of Experimental Psychology, 40A, 653–670.

Metzger, L.J., Orr, S.P., Lasko, N.B., McNally, J. & Pitman, R.K. (1997). Seeking the source of emotional Stroop interference effects in PTSD: A study of P3s to traumatic words. Integrative Physiological and Behavioral Science, 32 (1), 43–51.

Mogg, K., Bradley, B.P., Williams, R. & Mathews, A. (1993). Subliminal processing of emotional information in anxiety and depression. Journal of Abnormal Psychology, 102(2), 304–311.

Stroop, J.R. (1935). Studies of interference in serial verbal reaction. Journal of Experimental Psychology, 18 (6), 643–662.

Thomas, S.J., Johnstone, S.J. & Gonsalvez, C.J. (2007). Event-related potentials during an emotional Stroop task. International Journal of Psychophysiology, 63 (3), 221–231.

Weinstein, A.M. (1995). Visual ERPs evidence for enhanced processing of threatening information in anxious university students. Biological Psychiatry, 37, 847–858.

Williams, J.M., Mathews, A. & MacLeod, C. (1996). The emotional Stroop task and psychopathology. Psychological Bulletin, 120, 3–24.

Research activity

RESEARCH ACTIVITY: Phonemic similarity

According to Alan Baddeley (1986, 1990), the phonological loop was proposed partly to account for the finding that information in short-term memory is coded phonemically. For example, when people recall a list of words, their errors are often based on the sounds of the words, that is, on their phonemic structure. To illustrate this for yourself, try the following exercise (loosely based on an experiment in Baddeley, 1966).

Instructions

Print the table below and then fold the paper in half vertically, folding it outwards rather than inwards. Your test letters are on the left; write your answers on the right-hand side. The aim is to recall the sequence of letters in the exact order in which you read them. For example, if the test items are q w e r t y u i, you would write down q w e r t y u i. Read the eight letters of row 1 quietly to yourself once, then immediately turn the page over and write down your answer. Repeat this for each of the remaining rows. Try not to cheat: you are not testing your own ability but your (theoretical) “phonological loop”. After you have completed the task, follow the procedure for scoring your responses.

| Row | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | Your answers |
|---|---|---|---|---|---|---|---|---|---|
| 1 | H | S | F | Q | B | C | D | P | |
| 2 | G | D | B | T | Z | H | R | S | |
| 3 | Q | M | Z | R | C | P | D | G | |
| 4 | T | P | C | B | F | M | Q | Z | |
| 5 | F | R | Z | S | B | P | G | T | |
| 6 | C | G | B | P | M | Q | H | R | |
| 7 | H | Q | R | M | C | T | D | B | |
| 8 | D | P | T | C | R | Z | F | H | |
| 9 | M | R | H | Z | C | G | B | T | |
| 10 | B | T | G | P | R | F | M | S | |

| | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|---|---|---|
| Total for even rows | | | | | | | | |
| Total for odd rows | | | | | | | | |

| Total of values in bold boxes | |
|---|---|
| Total of values in normal boxes | |

Scoring

- Place a small circle around items that are incorrect. For example, if I wrote q w e r y u t i as my answer to the first example, I would circle y, u and t (although all three were in the original list, they are in the wrong positions).

- For each column, add up the number of circled items in the even rows.

- For each column, add up the number of circled items in the odd rows.

- Add the totals in the tinted (bold) boxes together: columns 1–4 of the even-row totals and columns 5–8 of the odd-row totals, which is where the rhyming letters appear. This value is the total number of errors with letters that rhyme: B, C, D, G, P, T.

- Add the totals in the normal boxes together: columns 5–8 of the even-row totals and columns 1–4 of the odd-row totals. This value is the total number of errors with letters that do not rhyme: H, F, M, Q, R, S, Z.

- Compare the two totals.
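The same scoring can be automated. In this Python sketch (function and variable names are hypothetical), each answer is compared with the presented row position by position, which gives the same count as circling items when the answer has eight letters; each error is then classified by the letter that was presented at that position, which is what the column layout of the table does.

```python
# Letters whose names rhyme (bee, see, dee, gee, pee, tee) and the
# non-rhyming letters used in this activity (Z is "zed" in British English).
RHYMING = set("BCDGPT")
NON_RHYMING = set("FHMQRSZ")


def score_row(presented, recalled):
    """Count errors in one row, split by rhyming vs non-rhyming letters.

    A position is an error if the recalled letter differs from the
    presented one (wrong letter, wrong order or left blank). Each error is
    classified by the presented letter; letters outside both sets are
    not counted. Returns (rhyming_errors, non_rhyming_errors).
    """
    presented = presented.replace(" ", "").upper()
    recalled = recalled.replace(" ", "").upper()
    rhyming_errors = non_rhyming_errors = 0
    for i, letter in enumerate(presented):
        answer = recalled[i] if i < len(recalled) else None
        if answer != letter:
            if letter in RHYMING:
                rhyming_errors += 1
            elif letter in NON_RHYMING:
                non_rhyming_errors += 1
    return rhyming_errors, non_rhyming_errors


# Hypothetical answers to rows 1 and 2 of the table.
rows = [("H S F Q B C D P", "H S F Q B D C P"),
        ("G D B T Z H R S", "G B D T Z H R S")]
totals = [sum(counts) for counts in zip(*(score_row(p, r) for p, r in rows))]
print(totals)  # [4, 0]
```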

Prediction

Baddeley’s theory of phonological encoding predicts that you should have a greater number of errors for letters that rhyme than for letters that do not rhyme, even though you have not verbalised the letters during learning.

Example:

| | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|---|---|---|
| Total for even rows | **0** | **1** | **1** | **0** | 0 | 1 | 1 | 1 |
| Total for odd rows | 0 | 0 | 0 | 0 | **1** | **2** | **2** | **1** |

| Total of values in bold boxes | 8 |
|---|---|
| Total of values in normal boxes | 3 |

In this example, more errors were made on rhyming letters than on non-rhyming letters. There also appears to be a strong primacy effect, with fewer errors in the early positions (columns 1–4) than in the later ones (columns 5–8).

Ask yourself:

- Is there an overall primacy or recency effect in your data?

- If so, how might this affect the prediction of the theory?

- Did you find yourself trying to rehearse the items before you wrote them down?

- What other learning strategies did you use?

- Do you think the results would be any different if you repeated the letters aloud during learning?

- Try testing a friend. Do they get similar results? If you add your data together, does a pattern emerge?

References

Baddeley, A.D. (1966). The influence of acoustic and semantic similarities on long-term memory for word sequences. Quarterly Journal of Experimental Psychology, 18, 302–309.

Baddeley, A.D. (1986). Working Memory. Oxford: Clarendon Press.

Baddeley, A.D. (1990). Human Memory: Theory and practice. Hove, UK: Psychology Press.


Chapter 7

Case study

CASE STUDY: Amnesia and long-term memory: the case of H.M.

Amnesia is characterised by memory impairment in patients whose intellectual functions and immediate memory span are intact (Sanders & Warrington, 1971). Central features of the syndrome include defective learning and retention of ongoing events. Another consistent research finding is loss of memory for events before the onset of the illness, known as retrograde amnesia.

Memory following brain damage has been studied in several cases. H.M. is one of the most famous cases in neuroscience. He was the first patient to undergo neurosurgery in an attempt to cure his seizures, but unfortunately the surgery left him with severe memory impairment. The study of H.M. led to insights into how the brain can reorganise itself following damage.

As well as having intact intellectual and perceptual functions, H.M., despite a large medial temporal lobe (MTL) lesion, had a considerable capacity for sustained attention, including the ability to keep information in mind for a long time after it was presented (Squire, 2009). H.M. also had an intact digit span. Such information remained available as long as it could be actively maintained by rehearsal. For example, H.M. could retain a three-digit number for 15 minutes by continuous rehearsal, organising the digits according to a mnemonic scheme he had devised. However, when his attention shifted to a new topic, the whole event was forgotten.

In contrast, when the material was hard to rehearse, his recall was significantly worse, and the information was forgotten in less than a minute. These key findings supported a fundamental distinction between immediate memory and long-term memory (LTM), suggesting that damage to the medial temporal lobe impairs learning and recall in long-term memory (Baddeley & Warrington, 1970). Furthermore, the distinction between these types of memory has been a key concept in psychological research on how the brain organises its memory functions (Squire, 2009).

Work with H.M. also provided insight into the existence of multiple memory systems. Despite his profound and global memory impairment, H.M.'s visuo-motor skills were spared. When he was asked to draw a five-pointed star while seeing the star and his hand only as a mirror image, his performance was excellent, and he retained this skill over three days. However, at the end of testing, H.M. had no recollection of having done the task before (Milner, 1962). This finding suggested that there is more than one memory system, and that some kinds of memory lie outside the province of the MTL.

Although motor skills were at first widely regarded as a special case, it later became apparent that they are only a subset of a larger domain of skill-like abilities, all of which are preserved in amnesic patients. The preserved ability of amnesic patients to learn a perceptual skill (mirror reading) suggested a distinction between two classes of knowledge: declarative and procedural (Cohen & Squire, 1980). Consequently, it was concluded that H.M. had an impairment of declarative memory, the kind of memory that depends on the MTL. The finding that memory is not a single faculty of the mind ultimately led to the identification of multiple memory systems.

A key insight from studying H.M.'s memory came from considering his capacity to remember information he had acquired before surgery. First, H.M.'s ability to recognise the faces of people who had become famous before his surgery was as good as, if not better than, that of age-matched controls. This implied that the MTL is not the sole site for storing previously acquired knowledge. This finding subsequently led to interest in autobiographical memory: the access of specific memories and the level of detail at which they can be recollected. Findings from Corkin (1984) showed that H.M. could produce well-formed autobiographical memories from when he was 16 or younger. As H.M. aged, however, the situation changed. Later in life (at 76 years old), his memories consisted of factual recall rather than specific episodes, and he could not narrate any single event occurring at a specific time and place. It was concluded that memory for autobiographical events depends heavily on the MTL for as long as the memories persist.

More recent research has complicated this picture. In 2002–2003, MRI scans were taken of H.M.'s brain (Salat et al., 2006). The scans documented changes that had occurred since his first MRI scans in 1992–1993, including cortical thinning, subcortical atrophy, abnormal white matter and subcortical infarcts. These changes complicate the interpretation of neuropsychological data collected over the same period. Another consideration is that remote memories may have been intact during the early years following surgery but then faded with time, because they could not be strengthened through rehearsal and relearning.

Overall, it was concluded that memories from early life remain intact unless the damage extends into the lateral parts of the temporal lobe or into the frontal lobe. The structures damaged in H.M. are interpreted as important for the formation of LTM and for its maintenance for a period of time after learning. During this period, gradual changes are thought to occur in the neocortex (a process known as memory consolidation) that increase the complexity, distribution and connectivity among multiple cortical regions. Thus, it has been suggested that, over time, memory comes to be supported by the neocortex rather than the MTL.

H.M. sparked and motivated an enormous amount of research, in both humans and animals, on the topic of remote memory, and his case continues today to stimulate discussion about the nature and significance of retrograde amnesia.

References

Baddeley, A.D. & Warrington, E.K. (1970). Amnesia and the distinction between long- and short-term memory. Journal of Verbal Learning and Verbal Behavior, 9 (2), 176–189.

Cohen, N.J. & Squire, L.R. (1980). Preserved learning and retention of pattern-analysing skill in amnesia: Dissociation of knowing how and knowing that. Science, 210 (4466), 207–210.

Corkin, S. (1984). Lasting consequences of bilateral medial temporal lobectomy: Clinical course and experimental findings in H.M. Seminars in Neurology, 4, 252–262.

Milner, B. (1962). Les troubles de la mémoire accompagnant des lésions hippocampiques bilatérales. In P. Passouant (Ed.), Physiologie de l'hippocampe (pp. 257–272). Paris: Centre National de la Recherche Scientifique.

Salat, D.H., van der Kouwe, A.J.W., Tuch, D.S., Quinn, B.T., Fischl, B., Dale, A.M. & Corkin, S. (2006). Neuroimaging H.M.: A 10-year follow-up examination. Hippocampus, 16, 936–945.

Sanders, H.I. & Warrington, E.K. (1971). Memory for remote events in amnesic patients. Brain, 94, 661–668.

Squire, L.R. (2009). The legacy of patient H.M. for neuroscience. Neuron, 61 (1), 6–9.

Research activity

RESEARCH ACTIVITY: Word-stem completion task

For this exercise it is important that you follow the instructions carefully and that you are NOT tempted to scroll forward at any point.

Phase 1

Instructions: Please read the following text through once only:

The house stands on a hill overlooking the waters of the river Agua. Its occupants are a city broker and his friend, both soon to retire. They both share an interest in this region of Spain, having spent many a joyful holiday here. Just six more months of work back home and they will be out here for a good amount of time each year, rather than the odd weekly visit. They like the peace and the slow pace of life here compared to the hustle and bustle of central London, where they have lived for the past 20 years. The cost of living is also much better here and their pension will go much further.

Phase 2

Below are 12 word stems, each beginning with three letters, with the last three letters missing. Your task is to complete the words to make real words, but you must NOT use any word that was present in the previous text. DO NOT cheat or reread that text! Write out your answers on a spare piece of paper.

STA _ _ _

WAT _ _ _

FRI _ _ _

RET _ _ _

REG _ _ _

JOY _ _ _

MON _ _ _

AMO _ _ _

RAT _ _ _

BUS _ _ _

LIV _ _ _

BET _ _ _

Once you have finished, go to the next phase.

Phase 3

Your next task is to study the following 12 words for 2 minutes.

Detour

Thrown

Entail

Trance

Locate

Potent

Series

Tender

Ration

Leaves

String

Beaker

After 2 minutes have passed, go to the next phase.

Phase 4

Below are 12 word stems, each beginning with three letters, with the last three letters missing. Your task is to complete the words to make real words, but you must NOT use any word that was present in the previous list. DO NOT cheat or reread that list! Write out your answers on a spare piece of paper.

DET _ _ _

THR _ _ _

ENT _ _ _

TRA _ _ _

LOC _ _ _

POT _ _ _

SER _ _ _

TEN _ _ _

RAT _ _ _

LEA _ _ _

STR _ _ _

BEA _ _ _

You may now check your answers. For Phase 2, score 1 point for each word you completed that appears in the text passage. For Phase 4, score 1 point for each word you completed that appears in the word list.

Rationale: What is this activity about?

Jacoby et al. (1993) devised a method to tease apart the separate influences of conscious and non-conscious processes in memory. The assumption is that the two processes contribute independently during learning, and that if conscious processes are prevented from operating, any remaining influence of prior exposure on certain memory tasks must reflect non-conscious processing.

The stem-completion task you carried out above is usually conducted under four conditions. The stimuli can be presented in conditions in which attention is either highly focused (optimal condition) or only partially focused (suboptimal condition) on the words presented. The task carried out is stem completion – either trying to complete the stems using the words presented (inclusion task), or trying to complete them by NOT using the words presented (exclusion task). The four conditions are shown in the table below.

| Task | Optimal word presentation | Suboptimal word presentation |
|---|---|---|
| Inclusion | Complete word stems to make previously seen words. Correct inclusion implies conscious retention of the words. | Complete word stems to make words previously shown suboptimally, such as while doing a distractor task or when presented very briefly. Correct inclusion implies non-conscious retention of the words. |
| Exclusion | Complete word stems but don't use any word shown previously. Correct exclusion implies conscious retention of the words. | Complete word stems but don't use any word shown previously at suboptimal levels, such as while doing a distractor task or when presented very briefly. Incorrect exclusion implies non-conscious retention of the words. |

Note that the key condition is suboptimal exclusion. If participants complete word stems using the suboptimally presented words when instructed NOT to use those words, then this implies that a non-conscious or implicit process is at work during the task.
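As a rough illustration of how the two influences can be separated numerically, the process-dissociation logic associated with Jacoby's work is often written as the pair of equations below. Here R stands for the probability of conscious recollection and A for the probability that a word comes to mind automatically; these symbols, the independence assumption behind them and the example figures are assumptions of this sketch rather than part of the activity. Note that the activity above collects only exclusion data, so on its own it cannot estimate R; the inclusion conditions in the table are needed as well.

```latex
\[
\begin{aligned}
P(\text{target} \mid \text{inclusion}) &= R + A(1 - R) \\
P(\text{target} \mid \text{exclusion}) &= A(1 - R) \\
\Rightarrow \quad R &= P(\text{target} \mid \text{inclusion}) - P(\text{target} \mid \text{exclusion}) \\
A &= \frac{P(\text{target} \mid \text{exclusion})}{1 - R}
\end{aligned}
\]
```

For example, with hypothetical scores of 0.60 for inclusion and 0.20 for exclusion, R = 0.60 − 0.20 = 0.40 and A = 0.20 / 0.60 ≈ 0.33. The subtraction is only valid if recollection and automatic influences are independent, which is precisely what the generate-and-test criticism in the Questions challenges.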

Phases 1 and 2 above were designed to be an exclusion task with a suboptimal prime. Since the words were embedded within a story, it is likely that you did not pay particular attention to individual words, but rather to the overall meaning of the text. Therefore the presentation of the target words could be considered suboptimal. In contrast, Phases 3 and 4 represent an exclusion task with an optimal prime. The words were presented individually, and you were given a sufficient amount of time to attend to them.

Your data

Score your work for Phases 1 and 2, and then for Phases 3 and 4. Give yourself 1 point each time you completed a word stem using a previously shown word (that is, each time you failed to exclude a target word). Ignoring any word stem that you could not complete, calculate the percentage of exclusion failures for each task. Thus, if in Phases 1 and 2 you failed to exclude two words, correctly excluded eight words and could not complete the remaining two stems, your percentage score would be 2 out of 10 completed stems, or 20%.
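The scoring rule above is simple arithmetic, but a short sketch can make it explicit. The Python below assumes you record one answer per stem, with `None` for any stem you could not complete; the function name `exclusion_failure_rate` and the answers in the example are hypothetical, while the target set is simply the Phase 3 word list.

```python
from typing import Optional, Sequence

# The 12 words studied in Phase 3; using any of them in Phase 4 is an exclusion failure.
PHASE_4_TARGETS = {
    "detour", "thrown", "entail", "trance", "locate", "potent",
    "series", "tender", "ration", "leaves", "string", "beaker",
}


def exclusion_failure_rate(answers: Sequence[Optional[str]], targets: set) -> float:
    """Percentage of completed stems that reused a target word.

    Stems recorded as None (or left blank) were not completed and are
    ignored, as the scoring instructions require.
    """
    # Normalise case and stray whitespace; drop uncompleted stems.
    completed = [a.strip().lower() for a in answers if a and a.strip()]
    if not completed:
        raise ValueError("no word stems were completed")
    failures = sum(1 for word in completed if word in targets)
    return 100 * failures / len(completed)


if __name__ == "__main__":
    # Hypothetical Phase 4 answers, in stem order (DET, THR, ENT, ... BEA).
    answers = [
        "detour", "thrown", "entire", "travel", "locker", "potato",
        "sermon", "tennis", "rather", "leader", None, None,
    ]
    print(exclusion_failure_rate(answers, PHASE_4_TARGETS))  # 20.0
```

With these hypothetical answers, two of the ten completed stems reuse a Phase 3 word, so the function returns 20.0, in line with the worked example. The same function can score Phases 1 and 2 if you pass it a set of the target words from the passage instead.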

Predictions

Explicit and implicit memory systems are both thought to operate during learning. The exclusion–inclusion task devised by Jacoby et al. (1993) is one way in which the relative contribution of each process may be isolated. In line with previous research, the predictions for the data obtained in this exercise are that: (1) incorrect exclusions with the optimal prime (Phases 3 and 4) should be fewer than those with the suboptimal prime (Phases 1 and 2); and (2) there should be some failures to exclude with the suboptimal prime, reflecting implicit, non-conscious processing of the target words.

Questions

- If you failed to exclude in the suboptimal condition, can you think of an explanation that does not involve the concept of implicit memory?

- One criticism of this approach is that participants use a generate-and-test rule. That is, they generate a possible stem completion and then test their memory to see if it was in the list. This might mean that explicit processes are not independent of implicit processes. Did you use the generate-and-test method?

- Can you think of other ways of presenting words suboptimally?

- Which aspects of the way the stimuli are presented suboptimally do you think are most important in determining whether someone fails to exclude an item?

- What might cause a failure to exclude in the optimal condition? Could a failure to exclude in the suboptimal condition be explained in the same way?

Reference

Jacoby, L.L., Toth, J.P. & Yonelinas, A.P. (1993). Separating conscious and unconscious influences of memory: Measuring recollection. Journal of Experimental Psychology: General, 122, 139–154.

Flashcards

Quiz

Chapter 8

Case study

CASE STUDY: Cognitive interview, eyewitness confidence and recall in older adults